It’s no secret that the top hyper-casual games got to where they are today, and turned a significant profit, by A/B testing. Whether you’re optimizing your monetization strategy, improving your game design, or giving your ASO a boost, A/B tests help you uncover opportunities that give your entire game a better chance of success - and help that success last longer. Some of the top benefits of testing include:

- Measuring the impact of any change to game design

- Predicting ARPU and retention uplift

- Preventing damage to your game’s KPIs

- Learning about how your users interact with your game and monetization strategy

But this article isn’t just about the advantages of A/B testing. Instead, Maayan Aharonovitch, Product Monetization Lead at Supersonic, is sharing the top 5 A/B tests she recommends running to optimize your entire hyper-casual game. Take a look below and integrate these into your testing strategy so you can be sure you’re maximizing each part of your game’s performance.

1. Interstitials

Interstitial ads are the highest-earning ad unit in the hyper-casual market. But many developers worry that these ad units hurt the user experience, so not all of them A/B test their interstitials. A/B testing addresses this concern: it lets you find the sweet spot that maximizes LTV by weighing the impact on both ad impressions and in-game metrics like retention, playtime, and - most importantly - APPU.

There are four types of interstitial tests in particular that you should run:

- Trigger point: Serving an interstitial ad before vs. after the end of the level. Most hyper-casual games work best when the ad is shown at the end of the level, but some puzzle games can achieve an ARPU uplift by showing one before the level ends.

- Timing: The duration between ads (e.g. 20, 30, or 40 seconds)

- Levels: The level at which you start showing ads (e.g. level 1, 2, or 7)

- Mid-level: Showing ads in the middle of a level. Best suited to games with levels longer than 45 seconds
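To make these four levers concrete, here is a minimal Python sketch of how a test setup could be described in code. Everything in it is an assumption for illustration: the `InterstitialVariant` fields, the example values, the event names, and the hash-based `assign_variant` helper are hypothetical and not Supersonic's actual implementation. The idea is simply that each variant bundles one value per lever and that every user lands in the same variant on every session.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class InterstitialVariant:
    name: str
    trigger: str                  # "level_end" or "before_level_end"
    min_seconds_between_ads: int  # timing lever (e.g. 20, 30, or 40)
    first_ad_level: int           # first level on which ads may appear
    mid_level_ads: bool           # whether mid-level ads are allowed


# Hypothetical variants; the numbers are placeholders, not recommendations.
VARIANTS = [
    InterstitialVariant("control", "level_end", 30, 2, False),
    InterstitialVariant("test", "level_end", 20, 1, True),
]


def assign_variant(user_id: str, experiment: str = "interstitial_v1") -> InterstitialVariant:
    """Deterministically bucket a user so they always see the same variant."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return VARIANTS[int(digest, 16) % len(VARIANTS)]


def should_show_ad(variant: InterstitialVariant, event: str, level: int,
                   seconds_since_last_ad: float) -> bool:
    """Decide whether to serve an interstitial for a given game event."""
    if level < variant.first_ad_level:
        return False  # ads have not started yet for this variant
    if seconds_since_last_ad < variant.min_seconds_between_ads:
        return False  # too soon after the previous ad
    if event == "mid_level":
        return variant.mid_level_ads
    return event == variant.trigger
```

In practice you would usually change one lever per test so that any difference in KPIs can be attributed to that lever alone.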

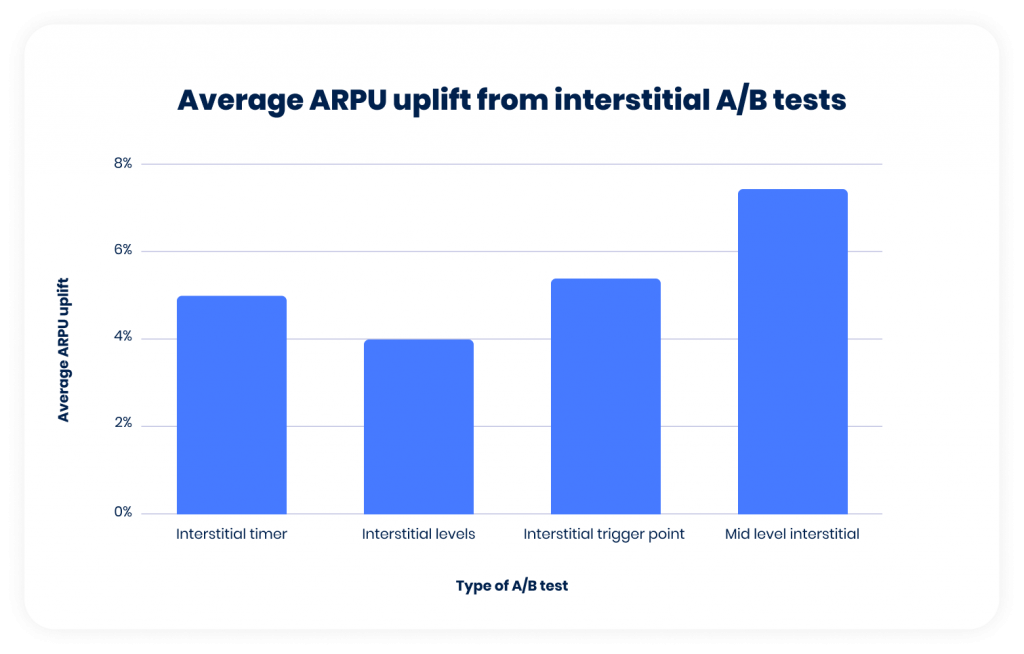

Optimizing your interstitial ad strategy can help boost ARPU. For example, across the A/B tests we ran in our titles, the average increase in ARPU for each type of test was:

- 5.5% from trigger point testing

- 5% from timing testing

- 4% from level testing

- 7% from mid-level testing
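For readers who want to see how an uplift figure like these is derived, here is a small Python sketch. It assumes ARPU is total revenue divided by the number of users in a group, and that uplift is the relative change of the test group over the control group. The function names and all numbers are made up for illustration and are not results from any real test.

```python
def arpu(total_revenue: float, users: int) -> float:
    """Average revenue per user for one test group."""
    if users <= 0:
        raise ValueError("users must be positive")
    return total_revenue / users


def relative_uplift(control: float, variant: float) -> float:
    """Relative change of the variant over the control (0.07 means +7%)."""
    if control == 0:
        raise ValueError("control value must be non-zero")
    return (variant - control) / control


# Invented example: two equally sized groups of 10,000 users each.
control_arpu = arpu(500.0, 10_000)
variant_arpu = arpu(535.0, 10_000)
print(f"ARPU uplift: {relative_uplift(control_arpu, variant_arpu):.1%}")
```

With these invented inputs the uplift comes out at about 7%. A real analysis would also check that the difference is statistically significant before declaring a winner.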

2. UI

As we mention in our blog post on art design tips, a simple and clear UI is essential both for helping users understand your game and for improving your monetization strategy. For example, a good UI can encourage more engagement with rewarded videos by showcasing the offer more effectively, leading to an increase in ARPU.

A/B testing UI is often overlooked because many developers focus on fine-tuning the game mechanic or core gameplay instead. But don’t forget to give your UI attention alongside your gameplay.

For the game Human Vehicle, for example, running an A/B test on the UI to rearrange the formatting, size, and appearance of the on-screen buttons led to a 20% increase in D7 retention and a 5% uplift in ARPU D7. Once implemented in a new version and launched to all users, ARPU D7 rose by 9%.

3. Sound FX

Hyper-casual users are diverse - they represent a wide range of ages. While older audiences may play without sound, we’re seeing that many younger users are doing the opposite. Sound effects are an excellent way to engage those who are playing with their sound on, and the metrics prove it. Implementing sound effects has led to an average ARPU uplift of 7%-10% across our games. Since confirming the effectiveness of sound effects in testing, we’re now suggesting developers include them as a default feature in their games.

Using Human Vehicle as an example again, A/B testing sound effect implementation led to D1 retention increasing by 12.9% and an ARPU uplift of 10.2%.

You can try A/B testing sound effects in your creatives, too, like the one for Color Match featuring a talking tomato. Testing this audible element helped the game generate significant profit and scale to reach #1 overall on iOS in the US.

4. Leaderboard

A leaderboard at the end of each level shows users their ranking compared to other “players”. It’s often populated with fake names but still creates that feeling of accomplishment and satisfaction - users get to see their progression even more clearly, which leads to higher retention. And as they play for longer, there are more opportunities to monetize, so ARPU increases, too. Any hyper-casual game can benefit from this feature - confirm it’s the right fit by A/B testing a leaderboard in your game and seeing if it improves in-game metrics and revenue.

For Escalators, A/B testing a leaderboard led to an ARPU uplift of 9%. This, along with other A/B tests such as adding more lanes to the road and changing the fail conditions, helped boost KPIs like APPU and retention while maintaining a low CPI, so the game could scale profitably and efficiently.

5. Environments

The environment in hyper-casual games comprises all the visual elements that make up the background and player zone (where the gameplay happens). This visual aspect is very important because gameplay tends to be simpler and more consistent throughout the levels than in other genres. Art design is a key way to make your game feel more dynamic - even if it’s simple, you can create the feeling of progression by changing the environment. For example, something as simple as going from a sea background to a sand background in Human Vehicle helped users stay engaged and feel a sense of accomplishment, which led to an increase in both D1 retention and ARPU.

And as a different example of implementation, Going Balls tested new environments as bonus levels that users could play by engaging with rewarded video or purchasing. This benefitted both retention and monetization, leading to a 5% uplift in ARPU.

A/B testing new environments was one of the first tests that we recommended to developers. It proved to be so effective that we now suggest it as a default setup - you should build your game with different environments from the start. Then be sure to still A/B test which types of backgrounds, and at which levels, yield the best results according to KPIs like retention and ARPU. A great place to start is using the environments from your winning creatives to inspire the background for a level (or set of levels) in your game, because it’s likely that these will perform well.

Just keep testing

A/B testing is crucial during soft launch, and continuing to test even after global launch can help you optimize and sustain performance. For Going Balls, we recently A/B tested 40 new video creatives showing a more challenging (booster) level with a different environment. Over a year after launch, these changes improved revenue and profit. They also revived the game in the charts - it reached the top 12 on Android in the US and achieved over 2.6M DAUs.

Once you find the winner of each of your tests, implement it in a new version of your game, then wait 7-30 days to measure the impact on retention and ARPU. The results should reflect the positive outcome from testing and move the needle in the right direction for your game’s performance.
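As a rough illustration of that post-release check, the Python sketch below computes day-N retention for an install cohort. It assumes the common definition, where a user counts as retained on day N if they were active exactly N days after installing. The data structures (`installs`, `activity`) and the sample users are hypothetical. You would run the same calculation on a cohort from the old version and a cohort from the new version, then compare the two.

```python
from datetime import date, timedelta


def day_n_retention(installs: dict[str, date],
                    activity: dict[str, set[date]],
                    n: int) -> float:
    """Share of the install cohort that was active n days after installing."""
    if not installs:
        return 0.0
    retained = sum(
        1
        for user_id, installed_on in installs.items()
        if installed_on + timedelta(days=n) in activity.get(user_id, set())
    )
    return retained / len(installs)


# Invented cohort: four users who all installed on the same day.
installs = {uid: date(2024, 1, 1) for uid in ("a", "b", "c", "d")}
activity = {
    "a": {date(2024, 1, 8)},
    "b": {date(2024, 1, 2), date(2024, 1, 8)},
    "c": {date(2024, 1, 2)},
}
d7 = day_n_retention(installs, activity, 7)
```

Waiting the full 7-30 days matters here because a D7 figure only exists once the cohort has had seven days to come back.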
